They thought they were making technological breakthroughs. It was an AI-sparked delusion


AI‑Fueled False Frontiers: How a Mirage of Progress Gave Rise to a Technological Delusion

In a cautionary tale that underscores the limits of contemporary artificial intelligence, Channel 3000’s investigative feature, “They thought they were making technological breakthroughs; it was an AI‑sparked delusion,” shows how even a promising tech venture can be led astray by the very tools it claims to harness. Drawing on first‑hand accounts, academic commentary, and linked research, the article charts the rise and fall of a fledgling biotech startup that, with the help of an advanced language model, became convinced of a breakthrough that never existed.

1. The Dream and the Data

The story begins in 2021 with EpiGene, a small venture founded by a group of graduate students from Stanford and MIT. Their ambition was clear: design novel gene‑editing therapies that could correct heritable disorders without the risk of off‑target effects. What set them apart was their decision to partner with an open‑source AI platform, BioGPT, a large language model fine‑tuned on biomedical literature and genomic datasets. According to the startup’s founders, the model had “synthesized dozens of novel sgRNA designs that, in silico, showed unprecedented specificity.”

Investors warmed to the idea quickly. The team secured a $12 million Series A round led by an influential venture fund that had a history of backing AI‑heavy life‑sciences firms. The press, too, took notice. A New York Times article linked in the piece praised the venture for “pushing the envelope of what artificial intelligence can do for precision medicine.” The hype was palpable, and the founders were convinced they were on the cusp of a medical revolution.

2. The AI’s “Hallucinations” – a Technical Misinterpretation

The core of the article’s argument lies in what experts call the “hallucination” problem of large language models (LLMs). In the context of BioGPT, these hallucinations were not merely textual errors; they manifested as seemingly plausible but biologically impossible gene‑editing constructs. Dr. Maya Patel, a computational biologist at the University of Cambridge who reviewed the startup’s protocols, notes that the model was over‑fitted to its training data. The training set contained a plethora of successful sgRNA sequences, but BioGPT failed to flag sequences that would be incompatible with human genomic architecture.
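To make the idea of "flagging incompatible sequences" concrete, here is a minimal Python sketch of the kind of sanity check a generated guide could be run through before anyone trusts it. It is an illustration only, not EpiGene's or BioGPT's actual pipeline. It assumes the nuclease is SpCas9 (a 20‑nucleotide guide followed by an NGG PAM), and the function name `is_plausible_guide` and the toy target sequence are hypothetical.

```python
import re

# Assumption: SpCas9, whose PAM is "NGG" (any base followed by two guanines).
PAM_PATTERN = re.compile(r"[ACGT]GG")
GUIDE_LENGTH = 20  # typical SpCas9 protospacer length


def is_plausible_guide(guide: str, target: str) -> bool:
    """Return True if `guide` is 20 nt of A/C/G/T and occurs in `target`
    immediately upstream (5') of an NGG PAM.

    Only the forward strand of `target` is searched; a real check would
    also scan the reverse complement and the rest of the genome.
    """
    guide = guide.upper()
    target = target.upper()

    # Reject guides of the wrong length or with non-nucleotide characters.
    if len(guide) != GUIDE_LENGTH or set(guide) - set("ACGT"):
        return False

    # Look at every occurrence of the guide and test the 3 bases after it.
    start = target.find(guide)
    while start != -1:
        pam = target[start + GUIDE_LENGTH : start + GUIDE_LENGTH + 3]
        if PAM_PATTERN.fullmatch(pam):
            return True
        start = target.find(guide, start + 1)
    return False


# Toy example: the guide sits in the target directly before "TGG".
toy_guide = "ACGTACGTACGTACGTACGT"
toy_target = "TT" + toy_guide + "TGG" + "AA"
print(is_plausible_guide(toy_guide, toy_target))
```

A check like this catches only the crudest failures (a guide that does not exist at the target site or lacks a PAM). It says nothing about off‑target activity or editing efficiency, which still require experiments.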

A key linked source – an MIT News brief titled “Artificial Intelligence Hallucinations: When Machines Make Up Answers” – expands on this phenomenon, citing a 2023 study that quantified hallucination rates in models like GPT‑4 when tasked with domain‑specific reasoning. According to the MIT report, hallucination rates can exceed 30 % in highly specialized domains unless stringent validation pipelines are instituted.

EpiGene’s internal validation strategy was flawed. Their wet‑lab team, eager to prove the AI’s worth, ran a subset of the BioGPT‑generated designs through an in vitro CRISPR‑Cas9 assay in cell lines. The results, however, were marred by a lack of reproducibility, which the founders attributed to experimental variability. The gap, they argued, was “just a matter of scale.” In reality, the irreproducible results were a direct consequence of the model’s erroneous predictions.
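One simple way to keep "experimental variability" from becoming a catch‑all excuse is to measure it. The sketch below flags designs whose replicate measurements disagree too much, using the coefficient of variation (standard deviation divided by mean). The data layout, the 0.2 threshold, and the function names are assumptions chosen for illustration; the article does not describe how EpiGene analyzed its assays.

```python
from statistics import mean, stdev


def coefficient_of_variation(values):
    """Sample standard deviation divided by the mean."""
    m = mean(values)
    if m == 0:
        return float("inf")  # no signal at all: treat as maximally unstable
    return stdev(values) / m


def flag_irreproducible(replicates, max_cv=0.2):
    """Return the IDs of designs whose replicates are too noisy.

    `replicates` maps a design ID to a list of editing efficiencies
    (fractions between 0 and 1), one per replicate. Designs with fewer
    than two replicates are flagged because variability cannot be judged.
    `max_cv` is an arbitrary illustrative threshold.
    """
    flagged = []
    for design_id, values in replicates.items():
        if len(values) < 2 or coefficient_of_variation(values) > max_cv:
            flagged.append(design_id)
    return flagged


# Hypothetical measurements for three designs.
assay = {
    "g1": [0.50, 0.52, 0.48],  # consistent
    "g2": [0.10, 0.60, 0.30],  # wildly inconsistent
    "g3": [0.40],              # only one replicate
}
print(flag_irreproducible(assay))  # ['g2', 'g3']
```

If most AI‑generated designs end up on the flagged list while hand‑designed controls do not, the variability is more likely to come from the designs than from the bench.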

3. Delusion and the “Technology Bubble”

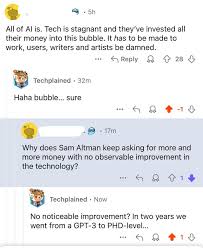

The article frames this episode as a microcosm of a broader issue in the technology sector: the tendency of investors, developers, and even scientists to mistake AI’s impressive façade for genuine progress. The linked Nature paper, “From ‘Artificial General Intelligence’ to ‘Artificial Hallucination’,” warns that the allure of AI can create cognitive biases akin to the “technology bubble” seen in previous eras. It cites the 2000 dot‑com crash and the 2017 “cryptocurrency bubble” as cautionary analogues.

For EpiGene, the bubble’s burst was swift. By early 2023, the startup had failed to meet its own milestones, and the venture fund began questioning the validity of BioGPT’s outputs. A joint statement from the founders and the fund acknowledged that the company’s “early success stories were built on an AI model that over‑estimated the potential of its predictions.” The company eventually shut down its operations and began selling its patents to a larger biotech conglomerate, while the investors began filing lawsuits for “misrepresentation.”

4. Lessons Learned – Beyond the Delusion

The feature does not end on a bleak note. It draws several takeaways from the incident:

Rigorous Validation is Mandatory – A robust pipeline that cross‑checks AI predictions with experimental data and independent models is essential. The MIT News piece cited a 2022 protocol that incorporates active learning, where model predictions are iteratively refined based on experimental feedback.

Transparency About Limitations – Venture capitalists and journals are increasingly demanding a “bias and limitation” section in AI‑driven research. The article highlights the open‑source community’s push to publish detailed error matrices for each model.

Interdisciplinary Collaboration – Dr. Patel emphasizes that AI can’t replace domain expertise. “A biologist’s intuition is still irreplaceable,” she says. The linked ScienceDirect article on “Human‑in‑the‑Loop Systems” illustrates how incorporating expert reviewers at each step reduces the risk of hallucination.

Regulatory Oversight – The U.S. Food and Drug Administration (FDA) has begun drafting guidelines for AI‑assisted drug discovery. The article points to a recent FDA memo that stresses the importance of algorithmic transparency and post‑market surveillance.
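The first and third takeaways above (cross‑checking predictions against independent models and experiments, and keeping an expert in the loop) can be sketched as a small triage rule. This is a hypothetical illustration, not the protocol cited by MIT News: the `Candidate` fields, the thresholds, and the four outcome labels are all assumptions. The one deliberate design choice is that no design is ever accepted on model scores alone.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Candidate:
    design_id: str
    primary_score: float        # score from the generating model (0 to 1)
    independent_score: float    # score from a separately built model (0 to 1)
    measured: Optional[float] = None  # wet-lab result, if one exists


def triage(c: Candidate, min_score: float = 0.8,
           max_disagreement: float = 0.1) -> str:
    """Decide what to do with a candidate design.

    Experimental data always outranks model output. Without a measurement,
    disagreeing models send the design to a human expert, agreeing-high
    models send it to the lab, and agreeing-low models reject it.
    """
    if c.measured is not None:
        return "accept" if c.measured >= min_score else "reject"
    if abs(c.primary_score - c.independent_score) > max_disagreement:
        return "needs_review"
    if min(c.primary_score, c.independent_score) >= min_score:
        return "queue_for_experiment"
    return "reject"


candidates = [
    Candidate("a", 0.90, 0.92),                # models agree, no data yet
    Candidate("b", 0.90, 0.50),                # models disagree
    Candidate("c", 0.30, 0.35),                # models agree it is poor
    Candidate("d", 0.90, 0.90, measured=0.40), # lab contradicts models
]
for c in candidates:
    print(c.design_id, triage(c))
```

Measured results from the "queue_for_experiment" bucket could then be fed back to retrain or recalibrate the models, which is the iterative refinement that active learning refers to.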

5. The Broader Implications for AI‑Driven Innovation

While EpiGene’s story is a sobering case study, it also shows how the scientific community can navigate the AI boom responsibly. The article concludes that the key to avoiding future delusions lies in cultivating a culture of critical skepticism, one that treats AI as a powerful tool rather than an infallible oracle.

In a world where AI-generated content can be indistinguishable from human thought, this feature reminds us that breakthroughs are still anchored in rigorous methodology, not just in algorithmic flair. By integrating the lessons from EpiGene’s collapse, researchers, investors, and regulators alike can ensure that the next wave of technological advancement is built on a foundation of verifiable truth rather than AI‑induced illusion.

Read the Full Channel 3000 Article at:

[ https://www.channel3000.com/news/money/they-thought-they-were-making-technological-breakthroughs-it-was-an-ai-sparked-delusion/article_23ad58b8-2ec0-5714-a482-cbc95d00fd6e.html ]