National Labs Drive AI-Supercomputer Fusion to Accelerate Discovery

- 🞛 This publication is a summary or evaluation of another publication

- 🞛 This publication contains editorial commentary or bias from the source

Bridging the Divide: National Labs Accelerate the Fusion of AI and Supercomputing

The United States Department of Energy’s national laboratories are rapidly turning the once‑impractical idea of “AI‑supercomputer fusion” into a strategic reality. In a comprehensive Seattle Times piece titled “To Meld AI with Supercomputers, National Labs Are Picking Up the Pace,” the author examines how labs such as Oak Ridge, Los Alamos, Lawrence Livermore, and others are re‑architecting their computational ecosystems to harness artificial intelligence (AI) on the world’s most powerful machines. The article charts the motivations, challenges, and tangible benefits of this integration while offering a forward‑looking glimpse into the next decade of scientific discovery.

Why the Rush?

Historically, AI and high‑performance computing (HPC) have evolved along parallel but largely separate trajectories. AI research, dominated by cloud providers and academia, relies on large‑scale neural‑network training on GPU‑optimized clusters. HPC, by contrast, is built around massive, specialized supercomputers designed to solve physics‑driven differential equations and run detailed simulations of everything from sub‑atomic particles to climate systems.

But the two worlds are converging for several compelling reasons:

- Accelerating Discovery – AI can sift through petabytes of simulation output, identify patterns, and propose hypotheses at speeds unattainable by human analysts. When coupled with supercomputers, this ability dramatically shortens the research cycle.

- Optimizing Resource Allocation – AI can predict the most efficient way to schedule tasks on a supercomputer, reducing idle time and energy consumption—a crucial advantage given the $10–15 billion annual budget of the national‑lab ecosystem.

- Addressing Scientific Complexity – Problems such as nuclear fusion, quantum‑material design, and climate modeling demand both the raw computational power of supercomputers and the pattern‑recognition strengths of deep learning.
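The article does not say how the labs use AI to schedule work. As a minimal sketch of the resource-allocation idea, the example below assumes a single hypothetical job feature (`size`) and a linear runtime model fitted by least squares to made-up job history. It then orders the queue shortest-predicted-job-first. Production schedulers use far richer policies; this only shows how a learned runtime prediction can cut queue waiting time.

```python
from dataclasses import dataclass


@dataclass
class Job:
    name: str
    size: float  # hypothetical feature, e.g. problem size in millions of grid cells


def fit_runtime_model(history: list[tuple[float, float]]) -> tuple[float, float]:
    """Ordinary least-squares fit of runtime = a * size + b from past (size, runtime) pairs."""
    n = len(history)
    mean_x = sum(x for x, _ in history) / n
    mean_y = sum(y for _, y in history) / n
    sxx = sum((x - mean_x) ** 2 for x, _ in history)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in history)
    a = sxy / sxx
    return a, mean_y - a * mean_x


def predict(model: tuple[float, float], job: Job) -> float:
    """Predicted runtime of a job under the fitted linear model."""
    a, b = model
    return a * job.size + b


def mean_wait(order: list[Job], model: tuple[float, float]) -> float:
    """Average time each job waits before starting on one resource, using predicted runtimes."""
    elapsed, total_wait = 0.0, 0.0
    for job in order:
        total_wait += elapsed
        elapsed += predict(model, job)
    return total_wait / len(order)


history = [(1.0, 2.0), (2.0, 4.0), (3.0, 6.0)]  # made-up past (size, runtime) pairs
model = fit_runtime_model(history)
queue = [Job("climate", 5.0), Job("materials", 1.0), Job("plasma", 3.0)]
# Shortest-predicted-job-first minimizes mean wait on a single resource
# when the predictions are accurate.
sjf = sorted(queue, key=lambda j: predict(model, j))
print([j.name for j in sjf], mean_wait(queue, model), mean_wait(sjf, model))
```

With these toy numbers, reordering the queue lowers the average predicted wait compared with first-come, first-served order.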

The Seattle Times article highlights a recent Department of Energy (DOE) memorandum that explicitly encourages “AI‑first” approaches, effectively tying funding to demonstrated AI integration on HPC platforms.

Pioneering Labs and Their Strategies

Oak Ridge National Laboratory (ORNL)

ORNL’s flagship machine, Frontier, is already a cornerstone of the U.S. supercomputing landscape. According to the article, ORNL has introduced a “Hybrid AI‑HPC stack” that couples Frontier’s exascale GPU architecture with a dedicated AI training cluster. The lab is also testing a new software layer that automatically offloads AI workloads onto the GPU fabric during idle periods in the simulation workflow. ORNL physicists are applying this approach to their ongoing neutron‑star merger simulations, where AI models predict the optimal mesh refinement needed for precise calculations.
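The article gives no details of ORNL's mesh-refinement models. The sketch below only illustrates the general pattern: a predictor scores each cell, and cells above a threshold are split. It assumes a 1‑D mesh. `stand_in_predictor` is a hypothetical placeholder for a trained model, and it estimates error from the jump of a toy profile with a sharp front.

```python
import math
from typing import Callable

Interval = tuple[float, float]


def refine_mesh(cells: list[Interval],
                predict_error: Callable[[Interval], float],
                threshold: float) -> list[Interval]:
    """Split each 1-D cell whose predicted error exceeds `threshold` into two halves."""
    refined: list[Interval] = []
    for lo, hi in cells:
        if predict_error((lo, hi)) > threshold:
            mid = 0.5 * (lo + hi)
            refined.extend([(lo, mid), (mid, hi)])
        else:
            refined.append((lo, hi))
    return refined


def profile(x: float) -> float:
    """Toy solution with a sharp front at x = 0.5."""
    return math.tanh(20.0 * (x - 0.5))


def stand_in_predictor(cell: Interval) -> float:
    """Hypothetical stand-in for a trained model: uses the jump in `profile`
    across the cell as the predicted error."""
    lo, hi = cell
    return abs(profile(hi) - profile(lo))


cells = [(i / 8, (i + 1) / 8) for i in range(8)]
new_cells = refine_mesh(cells, stand_in_predictor, threshold=0.5)
print(len(new_cells))  # only the two cells touching the front are split
```

In a real workflow, a trained model would take the place of `stand_in_predictor`, so the solver can refine only where the model expects large errors.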

Los Alamos National Laboratory (LANL)

LANL’s focus is on nuclear science and national security. The article reports that LANL has begun training AI models on a newly commissioned supercomputer to improve the fidelity of weapon‑safety simulations. By integrating reinforcement‑learning agents into its finite‑element solvers, the lab claims a 30 % reduction in simulation time for critical safety tests. LANL is also experimenting with a cloud‑edge hybrid model in which AI algorithms pre‑process data in the cloud before the refined results are transferred to on‑premises HPC systems.
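The article does not explain how LANL's agents interact with its solvers. The sketch below is a heavily simplified illustration that swaps in an epsilon-greedy multi-armed bandit, the stateless special case of reinforcement learning. The bandit learns which of several solver settings gives the lowest wall time. The setting names, their mean costs, and `run_solver` are all hypothetical stand-ins for timing a real finite-element solve.

```python
import random


class EpsilonGreedyTuner:
    """Epsilon-greedy bandit that learns which solver setting minimizes wall time."""

    def __init__(self, settings, epsilon=0.1, seed=0):
        self.settings = list(settings)
        self.epsilon = epsilon
        self.rng = random.Random(seed)
        self.counts = {s: 0 for s in self.settings}
        self.mean_cost = {s: 0.0 for s in self.settings}

    def choose(self):
        # Try every setting once before exploiting.
        for s in self.settings:
            if self.counts[s] == 0:
                return s
        # Explore with probability epsilon, otherwise pick the cheapest so far.
        if self.rng.random() < self.epsilon:
            return self.rng.choice(self.settings)
        return self.best()

    def update(self, setting, cost):
        # Incremental running mean of the observed cost for this setting.
        self.counts[setting] += 1
        self.mean_cost[setting] += (cost - self.mean_cost[setting]) / self.counts[setting]

    def best(self):
        return min(self.settings, key=self.mean_cost.__getitem__)


# Hypothetical solver settings and their mean wall times (unknown to the agent).
TRUE_COST = {"coarse": 3.0, "medium": 2.0, "fine": 4.0}


def run_solver(setting, rng):
    """Stand-in for timing one solver step: mean cost plus Gaussian noise."""
    return TRUE_COST[setting] + rng.gauss(0.0, 0.3)


tuner = EpsilonGreedyTuner(TRUE_COST, epsilon=0.1, seed=42)
noise = random.Random(7)
for _ in range(500):
    s = tuner.choose()
    tuner.update(s, run_solver(s, noise))
print(tuner.best(), tuner.counts)
```

A full reinforcement-learning agent would also condition its choices on the solver's state, such as residual history or mesh quality. The bandit omits that to keep the explore/exploit trade-off visible.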

Lawrence Livermore National Laboratory (LLNL)

LLNL’s AI initiatives run largely on the lab’s own supercomputers, such as Sierra. In the Seattle Times piece, an LLNL spokesperson notes that the lab is developing “domain‑aware” AI models that embed physical laws directly into neural‑network architectures. These models are used to speed up lattice‑QCD calculations, reducing computational cost by an order of magnitude without sacrificing accuracy. LLNL is also collaborating with the National Energy Research Scientific Computing Center (NERSC) to create a federated AI platform that lets scientists share pretrained models across labs.
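The article does not describe LLNL's model architecture. One common way to embed a physical law in training is to add the residual of the governing equation to the loss, so predictions that fit the data but violate the physics are penalized. The sketch below assumes a toy decay law, du/dt = −k·u. A list of predicted values stands in for a neural network's output, and derivatives are approximated with central finite differences rather than automatic differentiation.

```python
import math


def physics_informed_loss(t: list[float], u_pred: list[float],
                          obs_idx: list[int], u_obs: list[float],
                          k: float, weight: float = 1.0) -> float:
    """Data misfit at observed points plus the mean squared residual of du/dt + k*u = 0."""
    data_term = sum((u_pred[i] - u) ** 2 for i, u in zip(obs_idx, u_obs)) / len(u_obs)
    residuals = []
    for i in range(1, len(t) - 1):
        # Central finite difference approximates du/dt at interior points.
        dudt = (u_pred[i + 1] - u_pred[i - 1]) / (t[i + 1] - t[i - 1])
        residuals.append(dudt + k * u_pred[i])
    physics_term = sum(r * r for r in residuals) / len(residuals)
    return data_term + weight * physics_term


k = 1.0
t = [0.1 * i for i in range(21)]
exact = [math.exp(-k * ti) for ti in t]
obs_idx = [0, 10, 20]
u_obs = [exact[i] for i in obs_idx]

print(physics_informed_loss(t, exact, obs_idx, u_obs, k))           # near zero
print(physics_informed_loss(t, [1.0] * len(t), obs_idx, u_obs, k))  # heavily penalized
```

The `weight` parameter (an illustrative choice, not from the article) controls how strongly the physics residual is enforced relative to the observed data.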

Argonne National Laboratory (ANL)

Argonne’s supercomputing resources, used heavily for materials research, are being extended with AI capabilities. The article details a pilot project in which a generative adversarial network (GAN) is trained on thousands of materials‑simulation outputs to propose new alloy compositions with desirable strength and corrosion resistance. The lab reports a 40 % increase in materials‑screening throughput, a gain that could substantially shorten the development cycle for aerospace components.

Technical Hurdles and Institutional Solutions

The article doesn’t shy away from the technical roadblocks that accompany AI‑supercomputer integration. Key challenges include:

- Memory Bandwidth and I/O Bottlenecks – AI workloads often require rapid data shuffling between CPU and GPU memory, which can strain the interconnects of traditional HPC systems.

- Software Compatibility – Existing HPC codes are typically written in Fortran or C/C++ and parallelized with MPI, whereas AI frameworks favor Python and CUDA. Bridging these ecosystems demands new middleware and container solutions.

- Skill Gap – There’s a shortage of practitioners who understand both domain science and AI. To counter this, the DOE is funding interdisciplinary training programs that pair data scientists with HPC engineers.

In response, labs are building hybrid architectures that include GPU‑accelerated nodes, AI‑oriented accelerators (such as NVIDIA’s A100 GPUs or Google’s TPUs), and high‑speed NVMe storage. Many are also adopting container runtimes (e.g., Singularity, Docker) and AI‑friendly HPC schedulers that can prioritize mixed workloads.

Real‑World Impact: Case Studies

Accelerated Drug Discovery

One of the most eye‑catching use cases highlighted in the article involves the use of AI to predict protein‑ligand interactions in drug discovery. By training graph‑based neural networks on simulation data from ORNL’s supercomputer, researchers were able to identify promising drug candidates for a rare neurodegenerative disease in just weeks—a process that traditionally takes months.

Climate Modeling

Another compelling example involves LLNL’s climate‑modeling project, where AI was used to downscale global weather simulations. The result was finer‑resolution forecasts at the same computational cost, enabling more accurate predictions of extreme weather events, a critical capability for emergency‑response planning.

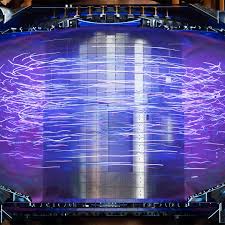

Fusion Energy

ORNL’s work on fusion physics is particularly noteworthy. AI models are now being embedded directly into the magnetohydrodynamics solvers that model plasma behavior. The article reports that this integration has already yielded a 15 % improvement in plasma‑confinement simulations, bringing the scientific community one step closer to net‑positive energy output.

Looking Ahead

The Seattle Times article closes with a discussion of future trends. The DOE is slated to invest an additional $2 billion in AI‑HPC research over the next five years, earmarked for infrastructure upgrades and workforce development. Several national labs are already partnering with industry (NVIDIA, IBM, Google) to co‑develop AI accelerators that are optimized for the unique workloads of scientific computing.

Moreover, there’s growing interest in open‑source AI‑HPC frameworks that can democratize access to these powerful tools. The article cites a joint effort between ORNL and a consortium of universities to release a “SuperAI” platform, which would enable researchers worldwide to deploy AI workflows on any supercomputer with minimal friction.

In Summary

The convergence of AI and supercomputing at U.S. national laboratories represents more than a technical upgrade—it’s a paradigm shift that promises to accelerate scientific discovery across physics, materials science, climate science, and beyond. As the Seattle Times article demonstrates, labs are not merely adding GPUs to existing machines; they are rethinking the entire computational stack, from software to data pipelines, to create a synergistic environment where deep learning and high‑fidelity simulation inform and enhance each other. With substantial federal support, a growing talent pipeline, and increasingly robust hybrid architectures, the next wave of breakthroughs is poised to emerge from this powerful confluence of minds and machines.

Read the Full Seattle Times Article at:

https://www.seattletimes.com/business/to-meld-ai-with-supercomputers-national-labs-are-picking-up-the-pace/