Hyper-Realistic Face Swaps: The AI Technology Making Them Convincing

The Science Behind Hyper‑Realistic Face Swaps: Why Today's AI Looks So Convincing

The rapid rise of hyper‑realistic face swapping has taken the tech world—and the broader public—by storm. From viral TikTok clips to alarming political misinformation, the line between authentic and fabricated visual content is increasingly blurred. To understand why modern face‑swap videos look almost indistinguishable from reality, we need to dig into the underlying technology that powers them: a combination of face detection, deep learning models, and sophisticated post‑processing pipelines.

1. A Brief History of Face Swapping

Face swapping is not a new idea. Even before the age of artificial intelligence, Photoshop and other image‑editing tools allowed users to overlay one face onto another with varying degrees of realism. However, these early attempts relied on manual alignment and were limited by the resolution and lighting of the source images.

The true breakthrough came in the late 2010s, when deep learning made automated face swapping practical. A key ingredient was the Generative Adversarial Network (GAN), a deep‑learning architecture introduced by Goodfellow et al. in 2014. A GAN consists of two neural networks, the generator and the discriminator, that play a min‑max game: the generator learns to create realistic images, while the discriminator learns to distinguish real images from generated ones. Over successive iterations, the generator produces increasingly convincing outputs. For face swapping, GANs can learn the subtle variations in facial geometry, texture, and expression, allowing a pipeline to render a donor’s face onto the person in the target footage with remarkable fidelity.
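
The min‑max game can be written as the value function from the original GAN formulation. Here $D(x)$ is the discriminator's estimated probability that $x$ is a real image, $G(z)$ is the generator's output for a random noise vector $z$, and $p_{\text{data}}$ and $p_z$ are the data and noise distributions:

```latex
\min_G \max_D V(D, G) =
  \mathbb{E}_{x \sim p_{\text{data}}}\left[\log D(x)\right]
  + \mathbb{E}_{z \sim p_z}\left[\log\left(1 - D(G(z))\right)\right]
```

The discriminator pushes $D(x)$ toward 1 on real images and $D(G(z))$ toward 0 on generated ones, while the generator pushes $D(G(z))$ toward 1. In practice, Goodfellow et al. also suggested training $G$ to maximize $\log D(G(z))$ instead, because it gives stronger gradients early in training when the discriminator easily rejects generated samples.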

2. The Core Pipeline

a. Face Detection & Landmark Extraction

Before a face can be swapped, the algorithm must first locate it in the image or video. Popular open‑source tools such as MTCNN (Multi‑Task Cascaded Convolutional Networks) and Dlib perform fast face detection and output a set of facial landmarks. MTCNN returns five key points (the two eyes, the nose tip, and the two mouth corners), while Dlib’s 68‑point shape predictor also traces the eyebrows, lip contours, and jawline. These landmarks serve as a scaffold for subsequent alignment.
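
As an illustration of how a landmark set becomes a usable scaffold, the sketch below assumes the detector has already produced 68 `(x, y)` points in the iBUG 300‑W ordering that Dlib's 68‑point predictor follows; the detector call itself is not shown. It groups the points into named regions and derives simple anchors (eye centres, a bounding box). The helper names are hypothetical, not part of Dlib's API.

```python
from typing import Dict, List, Tuple

Point = Tuple[float, float]

# Index ranges of the common 68-point landmark scheme (iBUG 300-W), which
# Dlib's pretrained 68-point shape predictor follows. Upper bounds are exclusive.
# "right"/"left" refer to the subject's own right and left.
REGIONS_68: Dict[str, range] = {
    "jaw": range(0, 17),
    "right_eyebrow": range(17, 22),
    "left_eyebrow": range(22, 27),
    "nose": range(27, 36),
    "right_eye": range(36, 42),
    "left_eye": range(42, 48),
    "mouth": range(48, 68),
}


def group_landmarks(points: List[Point]) -> Dict[str, List[Point]]:
    """Split a flat list of 68 (x, y) landmarks into named facial regions."""
    if len(points) != 68:
        raise ValueError(f"expected 68 landmarks, got {len(points)}")
    return {name: [points[i] for i in idx] for name, idx in REGIONS_68.items()}


def bounding_box(points: List[Point]) -> Tuple[float, float, float, float]:
    """Return (x_min, y_min, x_max, y_max) enclosing all landmarks."""
    xs = [p[0] for p in points]
    ys = [p[1] for p in points]
    return min(xs), min(ys), max(xs), max(ys)


def centroid(points: List[Point]) -> Point:
    """Mean position of a group of landmarks, e.g. an eye centre."""
    n = len(points)
    return (sum(p[0] for p in points) / n, sum(p[1] for p in points) / n)


# Hypothetical usage: real points would come from a landmark detector;
# here we fabricate 68 placeholder points just to exercise the helpers.
points = [(float(i), float(i % 10)) for i in range(68)]
regions = group_landmarks(points)
eye_centres = (centroid(regions["right_eye"]), centroid(regions["left_eye"]))
face_box = bounding_box(points)
```

Eye centres are a common choice of anchor because they are stable across expressions, which makes them useful for the alignment step that follows.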

b. Feature Embedding & Alignment

Once the landmarks are identified, the face is warped into a canonical pose. This normalization step ensures that both the source and target faces share a common coordinate system, simplifying the learning problem for the neural network. Libraries like OpenFace (https://cmusatyalab.github.io/openface/) can generate robust embeddings that capture identity‑related features, which are essential for preserving the donor’s likeness while maintaining the context of the original footage.
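
One common way to warp a face into a canonical pose is to fit a 2D similarity transform (uniform scale, rotation, translation) that maps the detected landmarks onto a fixed template, then apply it to the image. The sketch below is a generic least‑squares fit, written with Python complex numbers so that scale and rotation collapse into one complex factor `a`; it is not necessarily the exact method OpenFace or any face‑swap tool uses, and the template coordinates in the usage lines are made up.

```python
import cmath
from typing import List, Tuple

Point = Tuple[float, float]


def estimate_similarity(src: List[Point], dst: List[Point]) -> Tuple[complex, complex]:
    """Least-squares 2D similarity transform mapping src landmarks onto dst.
    With each point written as a complex number z, the model is a * z + t,
    where abs(a) is the scale and cmath.phase(a) the rotation angle."""
    if len(src) != len(dst) or len(src) < 2:
        raise ValueError("need at least two matching point pairs")
    zs = [complex(x, y) for x, y in src]
    zd = [complex(x, y) for x, y in dst]
    n = len(zs)
    ms, md = sum(zs) / n, sum(zd) / n  # centroids
    # Closed-form solution on centred points: a = sum(conj(s) * d) / sum(|s|^2)
    num = sum((s - ms).conjugate() * (d - md) for s, d in zip(zs, zd))
    den = sum(abs(s - ms) ** 2 for s in zs)
    if den == 0:
        raise ValueError("source points are degenerate (all identical)")
    a = num / den
    t = md - a * ms
    return a, t


def apply_similarity(a: complex, t: complex, points: List[Point]) -> List[Point]:
    """Map each (x, y) through w = a * z + t."""
    out = []
    for x, y in points:
        w = a * complex(x, y) + t
        out.append((w.real, w.imag))
    return out


def invert_similarity(a: complex, t: complex) -> Tuple[complex, complex]:
    """Inverse transform, e.g. to paste a generated face back into the frame."""
    return 1 / a, -t / a


# Hypothetical usage: align three detected points to a made-up template.
template = [(30.0, 40.0), (70.0, 40.0), (50.0, 75.0)]
detected = [(112.0, 95.0), (171.0, 101.0), (139.0, 150.0)]
a, t = estimate_similarity(detected, template)
aligned = apply_similarity(a, t, detected)
scale, angle_rad = abs(a), cmath.phase(a)
```

A similarity transform (four degrees of freedom) is a deliberate choice here: it normalizes position, size, and in‑plane rotation without shearing the face, which a full affine fit could do.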

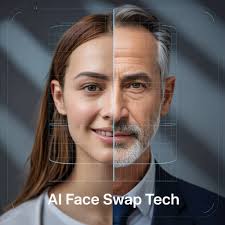

c. Autoencoders and Encoder‑Decoder Models

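A typical face‑swap architecture employs an autoencoder, a neural network that learns to compress an image into a compact latent vector and then reconstruct it. In the classic deepfake setup, a single shared encoder is trained on faces of both people, while each person gets a separate decoder that learns to reconstruct only that person’s face. To perform the swap, a face from the target footage is passed through the shared encoder, and the resulting latent vector is decoded with the donor’s decoder. Because the latent vector captures pose and expression, the output shows the donor’s identity with the subtle facial expressions and micro‑motions of the original performance, which contributes greatly to realism.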
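
The routing in the shared‑encoder, two‑decoder design is easy to lose in the prose, so here is a deliberately toy sketch of it. Real encoders and decoders are trained convolutional networks; here they are hand‑written functions operating on a made‑up `Face` record, and every name (`Face`, `Latent`, `shared_encoder`, `swap`) is hypothetical. The point is only which component sees which input.

```python
from dataclasses import dataclass
from typing import Callable, Dict


@dataclass(frozen=True)
class Face:
    """Toy stand-in for an aligned face crop: who it is and what it is doing."""
    identity: str
    expression: str
    yaw_deg: float  # head pose


@dataclass(frozen=True)
class Latent:
    """Toy latent code: ideally carries pose and expression, not identity."""
    expression: str
    yaw_deg: float


def shared_encoder(face: Face) -> Latent:
    # A real encoder is trained on BOTH people. The usual intuition is that,
    # because one encoder must serve both decoders, it learns to encode what
    # the faces share (pose, expression, lighting) rather than who they are.
    return Latent(face.expression, face.yaw_deg)


def make_decoder(identity: str) -> Callable[[Latent], Face]:
    # A real decoder is trained only on one person's faces, so it can only
    # "paint" that person. Here that behaviour is simply hard-coded.
    def decode(z: Latent) -> Face:
        return Face(identity, z.expression, z.yaw_deg)
    return decode


decoders: Dict[str, Callable[[Latent], Face]] = {
    "donor": make_decoder("donor"),
    "target": make_decoder("target"),
}


def reconstruct(face: Face) -> Face:
    # Training objective: each decoder reconstructs its own person's faces.
    return decoders[face.identity](shared_encoder(face))


def swap(target_face: Face) -> Face:
    # Swap: encode a face from the target footage, decode with the donor's decoder.
    return decoders["donor"](shared_encoder(target_face))


frame = Face("target", "smile", 15.0)
swapped = swap(frame)  # donor's identity, target's smile and head pose
```

During training only `reconstruct` is ever exercised; the swap comes "for free" at inference by crossing the decoders.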

d. Generative Adversarial Refinement

Many pipelines refine this reconstruction with a GAN‑style adversarial loss: the decoder acts as the generator, producing the final face, while a separate discriminator network evaluates its authenticity. Over many training iterations, the generator learns to produce outputs that not only match the donor’s identity but also blend seamlessly with the background, lighting, and camera motion of the target footage.
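
Adversarial refinement is typically implemented by adding a discriminator‑based term to an ordinary reconstruction loss. The sketch below shows one plausible combination, assuming an L1 pixel loss, the non‑saturating generator loss $-\log D(G(z))$, and a hypothetical weight `adv_weight=0.1`; real tools choose their own loss terms (some add perceptual or structural‑similarity losses) and weights. Images are flattened to plain lists of pixel values to keep it dependency‑free.

```python
import math
from typing import Sequence

EPS = 1e-7  # keeps log() away from 0


def _clamp_prob(p: float) -> float:
    return min(max(p, EPS), 1.0 - EPS)


def l1_reconstruction(pred: Sequence[float], target: Sequence[float]) -> float:
    """Mean absolute pixel error between the generated face and the reference."""
    if len(pred) != len(target) or not pred:
        raise ValueError("inputs must be non-empty and the same length")
    return sum(abs(p - t) for p, t in zip(pred, target)) / len(pred)


def adversarial_term(d_fake: float) -> float:
    """Non-saturating generator loss -log D(G(z)); small when the
    discriminator rates the generated face as real (d_fake close to 1)."""
    return -math.log(_clamp_prob(d_fake))


def generator_loss(pred: Sequence[float], target: Sequence[float],
                   d_fake: float, adv_weight: float = 0.1) -> float:
    """Reconstruction keeps the output faithful; the adversarial term pushes it
    toward images the discriminator cannot tell apart from real ones."""
    return l1_reconstruction(pred, target) + adv_weight * adversarial_term(d_fake)


def discriminator_loss(d_real: float, d_fake: float) -> float:
    """Binary cross-entropy: reward D(real) -> 1 and D(fake) -> 0."""
    return -(math.log(_clamp_prob(d_real)) + math.log(1.0 - _clamp_prob(d_fake)))
```

The two losses are minimized in alternation, one step for the discriminator and one for the generator, which is the practical form of the min‑max game.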

3. Real‑Time Performance and Hardware Acceleration

While early experiments were computationally intensive, open‑source frameworks such as DeepFaceLab (https://github.com/iperov/DeepFaceLab) and FaceSwap (https://github.com/deepfakes/faceswap) have streamlined the training and inference pipelines. Training a model still typically takes many hours on a GPU, but applying a trained model is far cheaper: with GPU acceleration and inference optimizers such as NVIDIA TensorRT, optimized pipelines can process high‑definition video at frame rates suitable for streaming or live events.
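
"Real time" ultimately comes down to a per‑frame time budget: at 30 frames per second, every stage of the pipeline together has about 33 ms per frame. The sketch below does that arithmetic; the stage names and millisecond timings are hypothetical placeholders, not measurements of any tool.

```python
from typing import Dict


def frame_budget_ms(fps: float) -> float:
    """Time available per frame, in milliseconds, to sustain a given frame rate."""
    if fps <= 0:
        raise ValueError("fps must be positive")
    return 1000.0 / fps


def check_realtime(stage_ms: Dict[str, float], fps: float) -> Dict[str, float]:
    """Sum per-stage latencies for one frame and compare them with the budget.
    Assumes the stages run one after another on each frame (no pipelining)."""
    total = sum(stage_ms.values())
    budget = frame_budget_ms(fps)
    return {"total_ms": total, "budget_ms": budget, "headroom_ms": budget - total}


# Hypothetical per-frame timings; real numbers depend on hardware,
# model size, and resolution.
stages = {"detect": 6.0, "align": 1.0, "swap_model": 18.0, "blend": 4.0}
report = check_realtime(stages, fps=30)
```

With these made‑up numbers the pipeline fits at 30 fps (about 4.3 ms of headroom) but not at 60 fps. Overlapping stages across frames (detecting frame n+1 while swapping frame n) can raise throughput, although it does not shorten the latency of any single frame.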

4. Temporal Coherence and Video Consistency

A face swap that looks flawless in a single high‑resolution frame can still flicker or jitter in a moving video if the algorithm treats each frame independently. Modern systems therefore incorporate optical flow and temporal smoothing techniques to ensure that the swapped face moves coherently with the subject’s head pose and expression. Some pipelines also fit 3D face models to estimate depth and lighting, further reducing jitter and uncanny artifacts.
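
A very simple form of temporal smoothing is an exponential moving average over the landmark positions from frame to frame, so that small detection noise does not make the alignment warp (and hence the swapped face) shake. This sketch is a stand‑in for illustration only; it is not optical flow, and `alpha` is a hypothetical tuning parameter.

```python
from typing import List, Optional, Sequence, Tuple

Point = Tuple[float, float]


def smooth_landmarks(frames: Sequence[Sequence[Point]],
                     alpha: float = 0.5) -> List[List[Point]]:
    """Exponential moving average over per-frame landmark sets.
    alpha in (0, 1]: higher values follow the raw detections more closely,
    lower values suppress more jitter at the cost of lagging behind motion."""
    if not 0.0 < alpha <= 1.0:
        raise ValueError("alpha must be in (0, 1]")
    smoothed: List[List[Point]] = []
    prev: Optional[List[Point]] = None
    for pts in frames:
        if prev is None:
            # First frame: nothing to blend with yet.
            cur = [(float(x), float(y)) for x, y in pts]
        else:
            # Blend each landmark with its smoothed position from the previous frame.
            cur = [(alpha * x + (1 - alpha) * px, alpha * y + (1 - alpha) * py)
                   for (x, y), (px, py) in zip(pts, prev)]
        smoothed.append(cur)
        prev = cur
    return smoothed
```

The trade‑off is visible in `alpha`: a fixed low value steadies a still head but makes the face trail behind fast movements, which is why production systems use motion‑aware methods instead.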

5. Detection, Counter‑Measures, and Ethical Implications

With great power comes great responsibility. The same technology that can create awe‑inspiring visual effects can also produce damaging misinformation. Researchers and industry groups have therefore invested heavily in deep‑fake detection. The FaceForensics++ benchmark (https://github.com/ondyari/FaceForensics), for example, pairs a large dataset of manipulated videos with baseline detectors, including an XceptionNet‑based classifier, trained to tell manipulated faces from real ones. Telltale signs that detectors look for include inconsistencies in eye blinking, facial symmetry, and micro‑texture noise.
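
To make one of those cues concrete, the sketch below measures blinking with the eye aspect ratio (EAR) from Soukupová and Čech's landmark‑based blink detector: the ratio of the eye's vertical openings to its width, which drops sharply when the eye closes. It assumes six eye landmarks ordered as in the 68‑point scheme; the threshold of 0.2 and the two‑frame minimum are hypothetical settings. This hand‑crafted heuristic only illustrates the idea and is not how the learned FaceForensics++ classifiers work.

```python
import math
from typing import Sequence, Tuple

Point = Tuple[float, float]


def eye_aspect_ratio(eye: Sequence[Point]) -> float:
    """EAR for six eye landmarks p1..p6 (corner, two upper-lid points,
    opposite corner, two lower-lid points), as in the 68-point scheme."""
    p1, p2, p3, p4, p5, p6 = eye
    vertical = math.dist(p2, p6) + math.dist(p3, p5)
    horizontal = math.dist(p1, p4)
    return vertical / (2.0 * horizontal)


def count_blinks(ear_series: Sequence[float], threshold: float = 0.2,
                 min_frames: int = 2) -> int:
    """Count runs of at least min_frames consecutive frames with EAR below threshold."""
    blinks = 0
    run = 0
    for ear in ear_series:
        if ear < threshold:
            run += 1
        else:
            if run >= min_frames:
                blinks += 1
            run = 0
    if run >= min_frames:  # the clip ended mid-blink
        blinks += 1
    return blinks


def blinks_per_minute(ear_series: Sequence[float], fps: float,
                      threshold: float = 0.2, min_frames: int = 2) -> float:
    """Blink rate over the clip, for comparison with typical human rates."""
    minutes = len(ear_series) / fps / 60.0
    return count_blinks(ear_series, threshold, min_frames) / minutes
```

An unusually low blink rate over a long clip is a weak signal on its own; newer generators reproduce blinking well, which is part of why detection has shifted toward learned classifiers.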

Nevertheless, detection algorithms tend to lag behind the pace of generation. One study (https://arxiv.org/abs/1809.00955) reported that classifiers detected synthetic faces only about 70% of the time when faced with advanced GAN‑based swaps. This cat‑and‑mouse dynamic underscores the urgent need for policy frameworks that regulate the use of deep‑fake technology, especially in political or high‑stakes contexts.
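
A figure like "detects fakes 70% of the time" can refer to different metrics, so it helps to see how they are computed from a detector's confusion matrix. In the sketch below "fake" is the positive class; the counts in the usage line are invented for illustration and are not data from the study above.

```python
from typing import Dict


def detection_metrics(tp: int, fn: int, tn: int, fp: int) -> Dict[str, float]:
    """Metrics for a deepfake detector, treating 'fake' as the positive class.
    tp: fakes flagged, fn: fakes missed, tn: real videos passed, fp: real flagged."""
    total = tp + fn + tn + fp
    return {
        "accuracy": (tp + tn) / total,        # correct calls over all videos
        "detection_rate": tp / (tp + fn),     # recall on fakes
        "false_alarm_rate": fp / (fp + tn),   # real videos wrongly flagged
    }


# Invented counts: 100 fake and 100 real videos.
metrics = detection_metrics(tp=70, fn=30, tn=90, fp=10)
```

Here the detector catches 70% of fakes while its overall accuracy is 80%, so the same system could be described by either number depending on which metric is reported.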

6. The Future of Face Swapping

Looking ahead, several trends are shaping the next wave of hyper‑realistic face swapping:

Higher‑Resolution Synthesis: Models are moving beyond 512 × 512 face crops. GAN architectures such as StyleGAN2 and StyleGAN3 already generate faces at 1024 × 1024, and work is under way to push synthesis toward 4K and even 8K resolutions.

Multimodal Integration: Future systems may fuse audio, motion capture, and depth sensors to produce fully immersive synthetic avatars.

Explainable AI: As the technology becomes mainstream, developers are exploring ways to make generation pipelines interpretable, enabling users to verify the provenance of content.

Regulatory Oversight: Governments and tech giants are exploring watermarks or digital signatures embedded during generation, so that synthetic content can later be identified and authentic content verified.
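
The core idea behind signature‑based provenance is that a tag computed over the exact content bytes at creation time stops verifying if even one byte changes. The sketch below uses Python's standard `hmac` module with SHA‑256 as a simplified stand‑in; the key and content are hypothetical. Real provenance schemes such as C2PA content credentials use public‑key signatures instead, so anyone can verify without holding the secret.

```python
import hashlib
import hmac


def sign_content(content: bytes, key: bytes) -> str:
    """Provenance tag: HMAC-SHA256 over the exact media bytes."""
    return hmac.new(key, content, hashlib.sha256).hexdigest()


def verify_content(content: bytes, key: bytes, tag: str) -> bool:
    """True only if the bytes are unchanged since signing (constant-time compare)."""
    return hmac.compare_digest(sign_content(content, key), tag)


# Hypothetical usage: a generator tags its output when it is created.
key = b"generator-secret-key"          # hypothetical secret held by the generator
video = b"...encoded video bytes..."   # placeholder content
tag = sign_content(video, key)
```

One design consequence: because the tag covers the raw bytes, even a harmless re‑encode breaks verification. That is why signatures are often paired with watermarks embedded in the pixels themselves, which are designed to survive such transformations.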

Conclusion

The convincing realism of today’s face swaps is the culmination of decades of research in computer vision, deep learning, and graphics. From robust face detection to sophisticated GANs and temporal coherence algorithms, each component works in concert to produce near‑perfect synthetic faces. While the technology offers exciting creative possibilities, it also poses profound ethical and societal challenges. Understanding the science behind hyper‑realistic face swapping is the first step toward responsibly harnessing and regulating this powerful tool.

Read the Full Impacts Article at:

[ https://techbullion.com/the-science-behind-hyper-realistic-face-swaps-why-todays-ai-looks-so-convincing/ ]